Every ML engineer hits this moment at some point: the model trains smoothly and the metrics line up perfectly, but performance drops in production. A check shows that everything else is working fine. The major cause of the problem is the features. That's why feature engineering is the least appreciated and most important step in a machine learning pipeline. It determines what the model is allowed to know and see, which patterns it can detect, and which signals it will ignore forever.

This is why machine learning engineers spend more time engineering features than fine-tuning algorithms. Feature engineering is not data cleaning; rather, it is model design through data representation. Career growth in machine learning is also strong: according to ZipRecruiter, the average salary of a Machine Learning Engineer is $128,769/year in 2026. Read on for a complete overview of feature engineering in 2026.

What is Feature Engineering in Machine Learning?

Feature engineering is the process of selecting, transforming, creating, and optimizing input variables (features) so that the data is in the form ML models can learn from most effectively.

In the real world without ML:

That's why changing algorithms (from linear models to XGBoost or deep learning) usually improves an ML model's performance less than better feature engineering does.

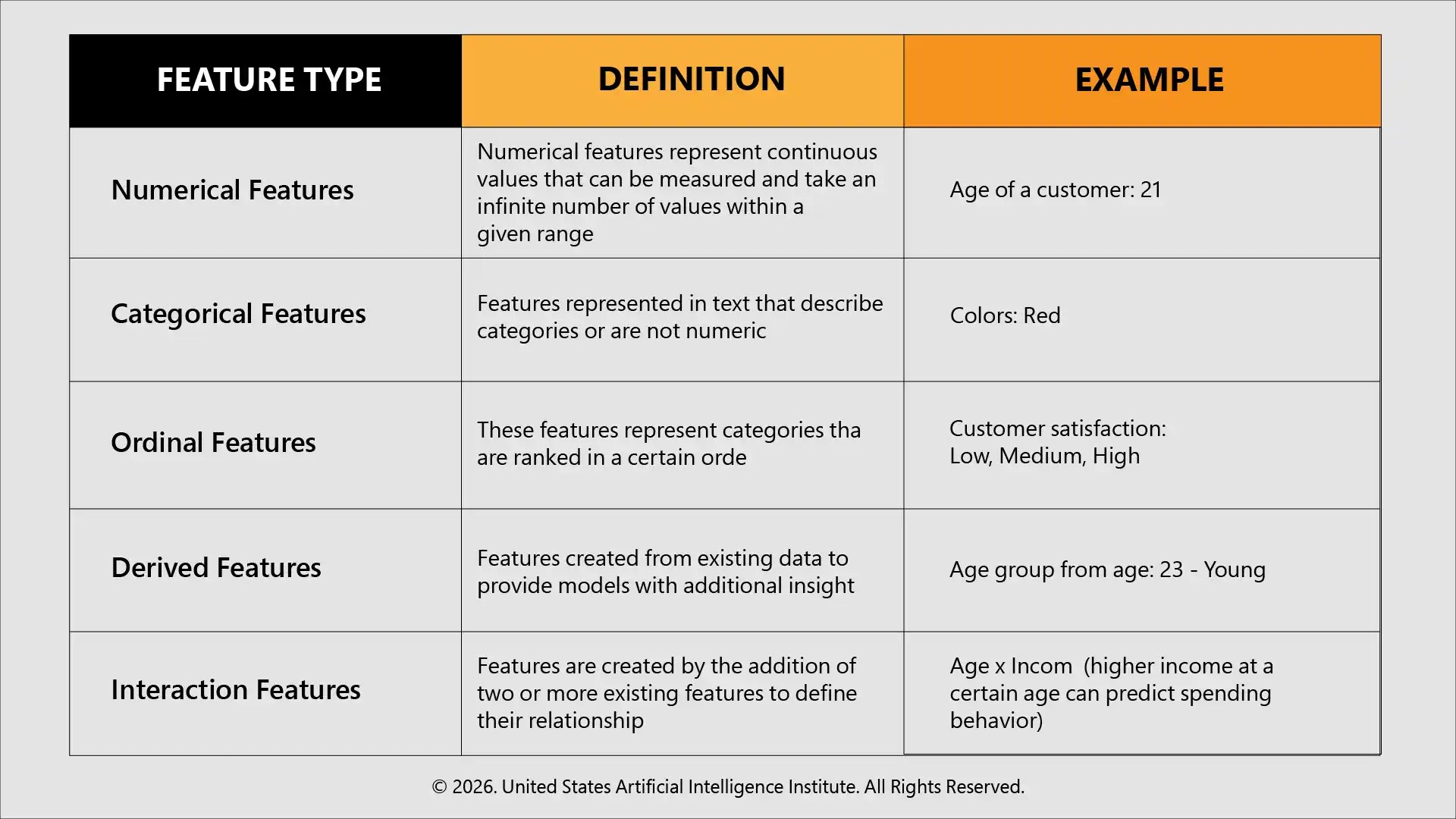

Major Libraries for Feature Engineering in Machine Learning

Here are the major libraries used for feature engineering in Machine Learning in 2026:

1. Pandas

Pandas is the most significant part of feature engineering. It is used for data cleaning, handling missing values, feature extraction, binning, aggregation, and the creation of derived features. Most feature engineering techniques start here.
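To make the binning and aggregation steps concrete, here is a minimal sketch. The `orders` table, its column names, and the bucket boundaries are all made up for illustration; they are assumptions, not part of any real dataset.

```python
import pandas as pd

# Hypothetical order data; names and values are illustrative only.
orders = pd.DataFrame({
    "customer_id": [1, 1, 2, 2, 3],
    "amount": [20.0, 35.0, 5.0, None, 120.0],
})

# Fill the single missing amount with the column median.
orders["amount"] = orders["amount"].fillna(orders["amount"].median())

# Binning: turn a continuous value into ordered buckets.
# The cut points (25 and 100) are arbitrary choices for this sketch.
orders["amount_bucket"] = pd.cut(
    orders["amount"],
    bins=[0, 25, 100, float("inf")],
    labels=["low", "mid", "high"],
)

# Aggregation: per-customer average amount, repeated on each of that customer's rows.
orders["customer_avg_amount"] = (
    orders.groupby("customer_id")["amount"].transform("mean")
)
```

Using `transform` rather than `agg` keeps the result aligned with the original rows, so the aggregate can be used directly as a new column.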

2. NumPy

NumPy supports fast numerical transformations, mathematical operations, and array-based manipulation of features. It is especially important when dealing with large-scale numerical features.
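As a small illustration of array-based transformations, the sketch below applies a log transform, a cap, and a ratio to whole arrays at once. The `income` and `debt` values and the cap of 200,000 are invented for the example.

```python
import numpy as np

# Made-up values with one extreme outlier.
income = np.array([20_000.0, 45_000.0, 1_200_000.0])
debt = np.array([5_000.0, 9_000.0, 300_000.0])

# log1p compresses a long right tail while preserving order.
log_income = np.log1p(income)

# Clip extreme values to a chosen range (the cap is an arbitrary assumption).
capped_income = np.clip(income, 0, 200_000)

# Element-wise ratio of two feature arrays, with no explicit loop.
debt_to_income = debt / income
```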

3. Scikit-Learn

Among these libraries, scikit-learn has the most structured and comprehensive set of feature engineering tools. It offers:

4. Advanced and Specialized Libraries for Feature Engineering

5. Deep Learning and Big Data Ecosystem

What are Feature Engineering Techniques?

Feature engineering techniques are systematic approaches for transforming raw data into informative, interpretable features that a model can be trained on. These methods determine how effective machine learning models are at recognizing patterns, filtering out noise, and generalizing to unseen data.

Let’s explore the various Feature Engineering techniques.

1. Handling Missing Values

Real-world data is incomplete. If not handled properly, missing data can distort what the model learns. Common approaches are filling gaps with a statistic such as the mean or median, forward or backward filling for time-based data, and dropping rows when only a handful are affected. The objective is to maintain the integrity of the data without introducing bias.
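Two of these approaches can be sketched as follows, assuming scikit-learn and pandas are available. The `age` column and the `readings` series are hypothetical. The imputer is fitted on the training data only, so the fill value never depends on unseen data.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer

# Hypothetical train/test splits with missing ages.
train = pd.DataFrame({"age": [25.0, np.nan, 40.0, 31.0]})
test = pd.DataFrame({"age": [np.nan, 52.0]})

# Learn the median from the training data, then reuse it on the test data.
imputer = SimpleImputer(strategy="median")
train_filled = imputer.fit_transform(train)
test_filled = imputer.transform(test)

# For time-ordered data, carry the last known value forward instead.
readings = pd.Series([1.0, np.nan, np.nan, 4.0])
readings_ffill = readings.ffill()
```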

2. Encoding Categorical Features

Machine learning models operate on numbers, not text. Categorical attributes (like product type or location) have to be transformed into numeric form. Good encoding methods represent the categories without implying relationships, such as an ordering, that don't exist.
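One-hot encoding is one common way to do this, since it gives each category its own column instead of an arbitrary rank. The sketch below uses scikit-learn's `OneHotEncoder`; the `location` values are made up, and `handle_unknown="ignore"` is one possible policy for categories that were never seen during fitting.

```python
import pandas as pd
from sklearn.preprocessing import OneHotEncoder

# Hypothetical training data and new data containing an unseen city.
train = pd.DataFrame({"location": ["Paris", "Tokyo", "Paris"]})
new = pd.DataFrame({"location": ["Tokyo", "Lima"]})

# Unknown categories at transform time become an all-zero row.
encoder = OneHotEncoder(handle_unknown="ignore")
train_encoded = encoder.fit_transform(train).toarray()
new_encoded = encoder.transform(new).toarray()

# Column order follows the sorted categories learned during fit.
learned_categories = list(encoder.categories_[0])
```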

3. Feature Scaling

Numerical attributes are frequently measured on different scales. Scaling prevents features with large ranges from overwhelming features with small ranges. This is essential for distance-based and gradient-based models and is a standard step in most ML pipelines.
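Standardization is one common scaling method. Here is a minimal sketch with scikit-learn's `StandardScaler`, which rescales each column to zero mean and unit variance. The two columns (an age and a salary) are invented for illustration.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical features on very different scales: age and salary.
X_train = np.array([
    [30.0, 40_000.0],
    [45.0, 85_000.0],
    [60.0, 130_000.0],
])

# Fit on training data only, then apply the same statistics to new data.
scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)
X_new_scaled = scaler.transform(np.array([[45.0, 85_000.0]]))
```

After scaling, both columns contribute on comparable terms to a distance or a gradient step, regardless of their original units.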

4. Feature Creation (Derived Features)

New features are generated from existing ones to bring out hidden patterns. Examples include taking the ratio of two values or extracting components such as the month or weekday from a date. Derived features often benefit the model more than raw inputs.
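Both kinds of derived feature can be sketched in a few lines of pandas. The column names (`price`, `area_sqm`, `sold_at`) and the values are hypothetical.

```python
import pandas as pd

# Hypothetical property sales.
df = pd.DataFrame({
    "price": [200.0, 450.0],
    "area_sqm": [50.0, 90.0],
    "sold_at": pd.to_datetime(["2024-01-06", "2024-03-11"]),
})

# Ratio feature: price normalized by size.
df["price_per_sqm"] = df["price"] / df["area_sqm"]

# Date parts: month and day of week (Monday=0, Sunday=6).
df["sold_month"] = df["sold_at"].dt.month
df["sold_dayofweek"] = df["sold_at"].dt.dayofweek
df["sold_on_weekend"] = df["sold_dayofweek"] >= 5
```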

5. Feature Selection

Not all features are useful. Feature selection removes irrelevant or redundant variables, which can reduce overfitting while also improving model interpretability and performance.
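As a rough sketch of two simple selection steps, the example below first drops a constant column with scikit-learn's `VarianceThreshold`, then keeps the single feature most associated with the label using `SelectKBest`. The toy matrix, the labels, and the choice of `k=1` are assumptions made for illustration; real projects often use other criteria.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, VarianceThreshold, f_classif

# Toy data: the middle column is constant and carries no information.
X = np.array([
    [1.0, 0.0, 3.0],
    [2.0, 0.0, 1.0],
    [3.0, 0.0, 4.0],
    [4.0, 0.0, 1.0],
])
y = np.array([0, 0, 1, 1])

# Step 1: remove features whose variance is zero.
variance_filter = VarianceThreshold(threshold=0.0)
X_reduced = variance_filter.fit_transform(X)
kept_after_variance = variance_filter.get_support()

# Step 2: keep the one remaining feature with the highest ANOVA F-score.
best = SelectKBest(score_func=f_classif, k=1)
X_best = best.fit_transform(X_reduced, y)
kept_after_kbest = best.get_support()
```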

Machine learning engineers consistently face:

These challenges are why feature stores and certification-backed ML training exist today.

Begin learning feature engineering today with a strong machine learning certification. From leakage prevention to scalable pipelines, the right certification equips machine learning engineers with real-world feature engineering techniques and methodologies that directly improve model reliability and production success.


Wrap Up

Learning feature engineering not only builds your machine learning skills but also shows you how differently ML models perform with and without it.

Algorithms optimize, but features define reality. Every transformation you apply shapes what your model can learn and predict. If you need machine learning models that survive beyond experiments, feature engineering has to become a deliberate engineering discipline, not an afterthought.

Master feature engineering in 2026 to develop, train, and deploy machine learning models that are not just well-trained but also smarter.
