Building a machine learning model is only part of the solution; deploying it is just as significant. Yet most organizations find it difficult to move models beyond the experimentation stage. According to a 2025 Gartner report, 85% of ML models never made it to production, largely because of inconsistent workflows and deployment issues.

Tools such as Docker help close this gap by providing consistent, portable environments that make model deployment more reliable. By combining Docker, Scikit-learn, and Flask with Python, Machine Learning Engineers can take more of their prototypes to production at global scale.

Why Use Docker for Machine Learning Model Deployment?

Before walking through the process, it helps to understand why Docker is so common in ML model deployment.

Docker bundles an application and its dependencies into a container. As a result, the model behaves identically whether the application runs on a development machine, in the cloud, or on a production server. Docker containers are scalable, portable, and lightweight, which makes them a reliable choice for deploying AI models.

Overview of the Model and Stack

This post builds a machine learning model with Scikit-learn, one of the most widely used machine learning libraries for Python. The model is trained on the classic Iris dataset and predicts the species of a flower from its input features.

We will use the following tech stack: Python for the script, Scikit-learn for training the model, and Docker for containerization.

This is a typical stack to start your technology journey in ML engineering or to complete your machine learning certifications.

Step 1: Prepare Your Environment

Before you can start, confirm you have Docker installed on your machine. You can download and install Docker Desktop via the official Docker website. This is important because the entire workflow will be done within a Docker container.

Step 2: Train and Save the Model Using Scikit-learn

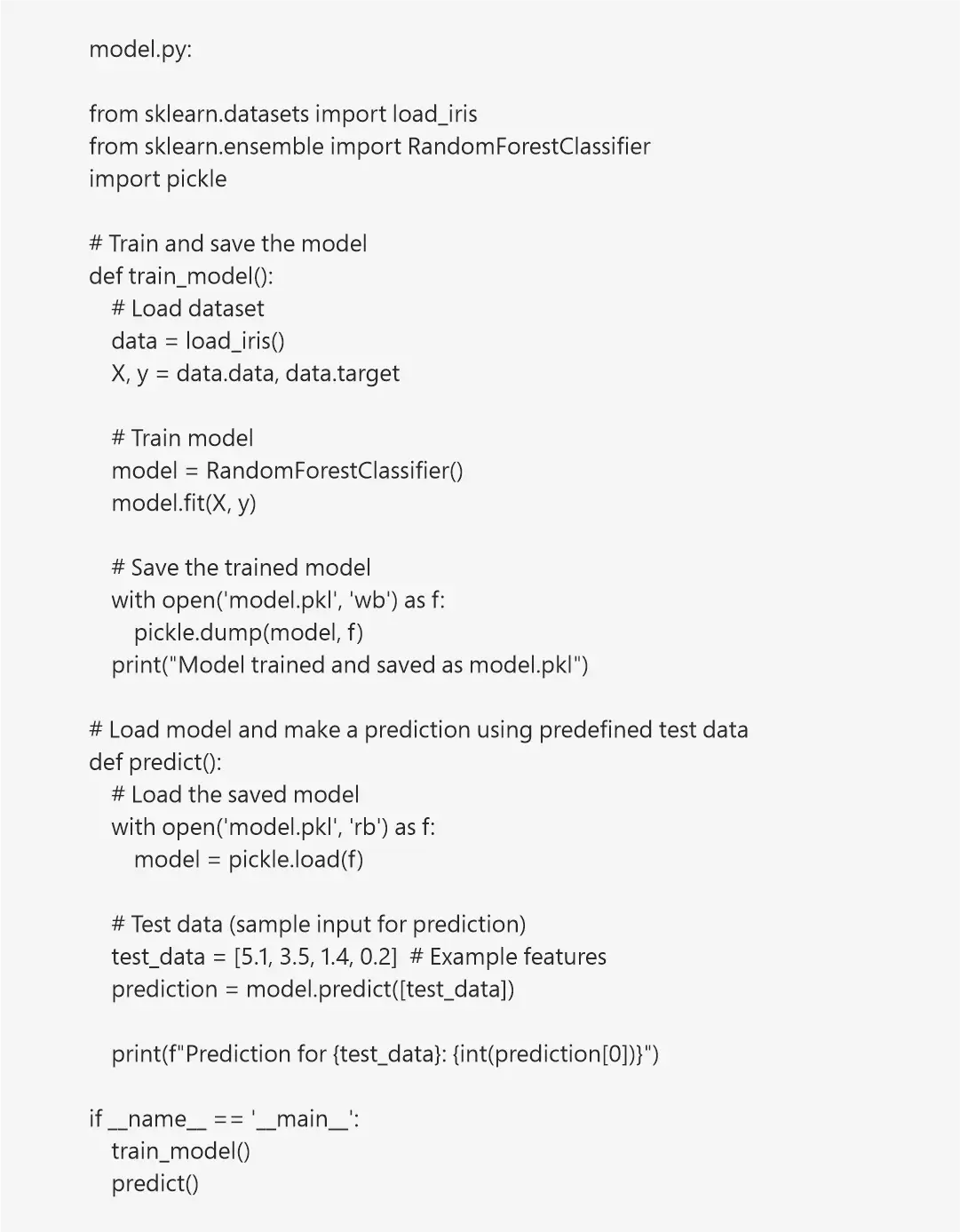

Create a Python script named model.py to train a Random Forest classifier on the Iris dataset. The model is saved using Python’s built-in pickle module for later use.

This script does two things: it trains the model and saves it, and then loads it back in for a prediction to validate it.
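A minimal sketch of what `model.py` could look like is shown here. The file name `iris_model.pkl`, the 80/20 split, the hyperparameters, and the sample measurements are assumptions made for illustration, not details taken from the original script.

```python
# model.py: train a Random Forest on Iris, save it with pickle,
# then load it back and make one prediction as a sanity check.
import pickle

from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

MODEL_PATH = "iris_model.pkl"  # hypothetical file name


def train_and_save(path=MODEL_PATH):
    """Train the classifier, pickle it to `path`, and return test accuracy."""
    iris = load_iris()
    X_train, X_test, y_train, y_test = train_test_split(
        iris.data,
        iris.target,
        test_size=0.2,       # assumed 80/20 split
        random_state=42,     # fixed seed so runs are repeatable
        stratify=iris.target,
    )
    model = RandomForestClassifier(n_estimators=100, random_state=42)
    model.fit(X_train, y_train)
    accuracy = model.score(X_test, y_test)
    with open(path, "wb") as f:
        pickle.dump(model, f)
    return accuracy


def load_and_predict(sample, path=MODEL_PATH):
    """Load the pickled model and return the predicted species name."""
    with open(path, "rb") as f:
        model = pickle.load(f)
    label = model.predict([sample])[0]
    return str(load_iris().target_names[label])


if __name__ == "__main__":
    accuracy = train_and_save()
    print(f"Test accuracy: {accuracy:.2f}")
    # Sepal length, sepal width, petal length, petal width (in cm)
    sample = [5.1, 3.5, 1.4, 0.2]
    print(f"Predicted species: {load_and_predict(sample)}")
```

Running `python model.py` locally before containerizing is a quick way to confirm that the script works. Keep in mind that pickle files should only be loaded from sources you trust.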

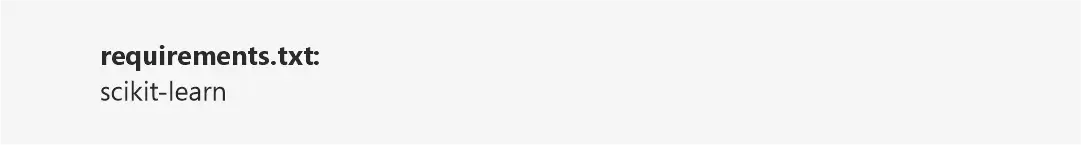

Step 3: Describe Dependencies in requirements.txt

To ensure a consistent environment inside Docker, list the necessary Python packages in a requirements.txt file.

This file will be used during the Docker image build to install the required packages.
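For a script that only uses Scikit-learn and the standard library, a minimal `requirements.txt` might contain a single line. The exact contents of the original file are not shown, so treat this as an assumption; Scikit-learn brings in NumPy and its other dependencies on installation.

```text
scikit-learn
```

Pinning an exact version that you have tested makes image builds more reproducible.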

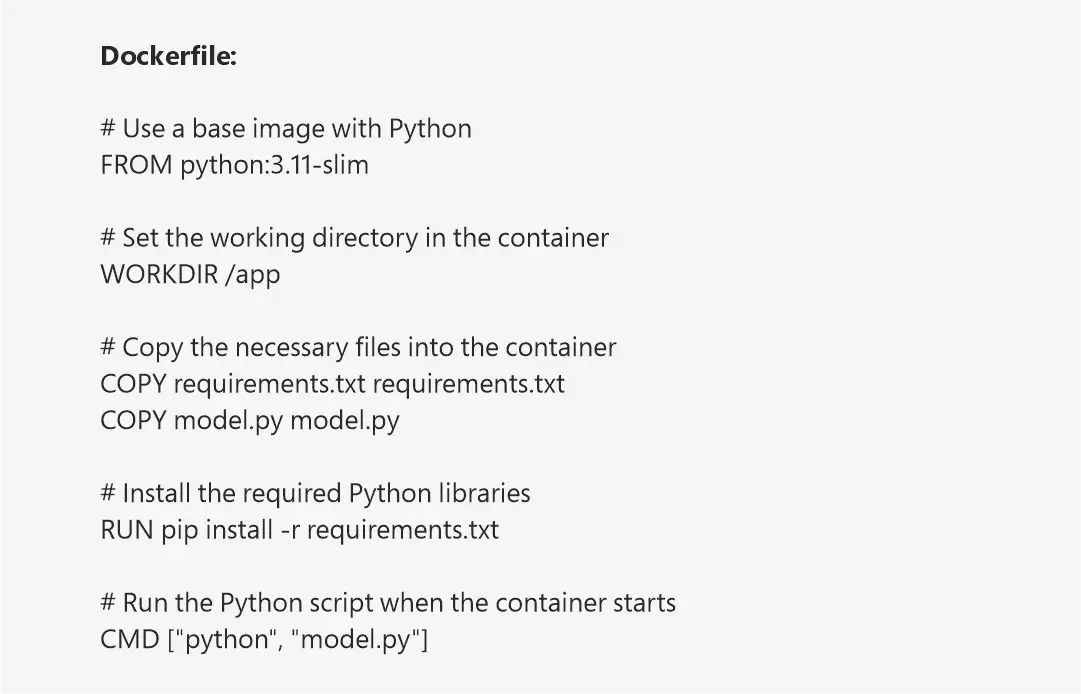

Step 4: Create a Dockerfile for Containerization

Next, create a Dockerfile to define the container's setup. This file tells Docker how to install dependencies and run your Python script.

This Dockerfile ensures the model training and prediction run automatically when the container is launched.
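A Dockerfile along these lines would fit the description. The base image `python:3.11-slim` and the working directory `/app` are assumptions chosen for illustration; any recent official Python image should work similarly.

```dockerfile
# Start from a small official Python image (assumed version)
FROM python:3.11-slim

# Work inside /app in the container (assumed path)
WORKDIR /app

# Copy and install dependencies first so Docker can cache this layer
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Copy the training script into the image
COPY model.py .

# Train, save, reload, and predict when the container starts
CMD ["python", "model.py"]
```

Copying `requirements.txt` before `model.py` means that editing the script does not force the dependencies to be reinstalled on the next build.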

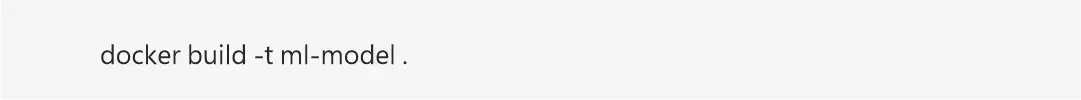

Step 5: Build the Docker Image

Open your terminal or command prompt, navigate to the directory containing your files, and build the Docker image using the following command:
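A typical form of the build command is sketched here. The image name `iris-model` is a hypothetical tag, and the trailing dot tells Docker to use the current directory as the build context.

```shell
# Build an image from the Dockerfile in the current directory
docker build -t iris-model .
```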

Step 6: Run the Docker Container

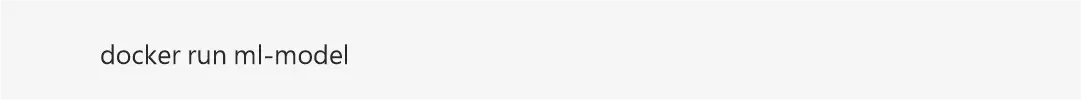

Once the image is created, you can run the container using:
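A typical form of the run command is sketched here, assuming the image was tagged with the hypothetical name `iris-model` at build time; substitute whatever tag you used.

```shell
# Run the container; --rm removes it automatically once the script exits
docker run --rm iris-model
```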

Note: This script doesn’t expose any ports, as it runs entirely inside the container and prints its output to the terminal.

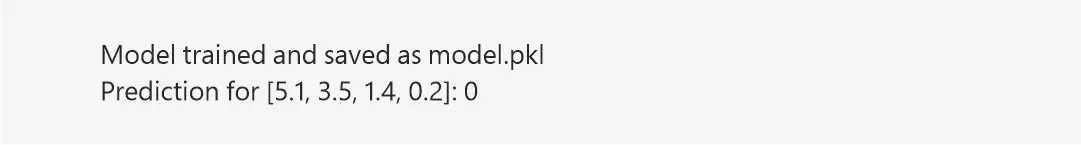

Step 7: Verify the Output

After running the container, the result of the training run and the model's prediction should be printed to your terminal.

This confirms that your Dockerized Python script successfully trained a model, saved it, loaded it again, and made a prediction, all within an isolated container.

Step 8: Push the Image to Docker Hub

To make your container image accessible from anywhere, push it to Docker Hub.

a. Log in to Docker Hub:

b. Tag your image:

c. Push the image:

You (or others) can now pull and run the image from any machine using:
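The commands for steps a to c, followed by the pull and run on another machine, might look like the sketch here. Both `your-username` and `iris-model` are placeholders: replace them with your Docker Hub username and the tag you gave the image at build time.

```shell
# a. Log in to Docker Hub (prompts for credentials)
docker login

# b. Tag the local image with your Docker Hub namespace
docker tag iris-model your-username/iris-model:latest

# c. Push the tagged image to Docker Hub
docker push your-username/iris-model:latest

# On any other machine: pull the image and run it
docker pull your-username/iris-model:latest
docker run --rm your-username/iris-model:latest
```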

Conclusion

Deploying machine learning models is an important skill for any aspiring Machine Learning Engineer. Tools such as Docker standardize the process and make it easier. Whether you are building a small prototype or a large-scale AI system, knowing how to train with Scikit-learn, serve with Flask, and containerize with Docker will put you in a good position for real-world applications, certifications, and job readiness.

Ready to Go Further?

With Docker for ML deployment as the first step, you can go on to explore Kubernetes for orchestrating your containers, investigate CI/CD pipelines, and add monitoring tools to track your model's performance. These next steps will make your AI models more robust, scalable, and production-ready.
