Language Models such as GPT-4, Claude, Llama, etc., have changed the face of AI applications in industries. However, such models are trained on stagnant datasets, and usually, they do not deliver accurate and up-to-date answers when asked about recent happenings or specific areas. This is one of the main issues that led to the fast-growing popularity of Retrieval-Augmented Generation (RAG).

Before a response is produced, RAG enables AI systems to search the external sources of information that are relevant. According to the report by Grand View Research, the sector of RAG in the world market reached more than USD 1.2 billion in the year 2025 and is likely to attain more than USD 11 billion by the year 2030, signifying the crucial necessity of real-time, facts-based AI systems in diversified fields of work.

In this blog, we will explore what RAG is, why it matters, and what are the most prominent open-source frameworks to build smarter AI applications.

What Is the Retrieval-Augmented Generation Framework?

A RAG (Retrieval-Augmented Generation) model links big language models (LLMs) to outside information. It fetches pertinent data either through documents, databases, or APIs and adds the answers given by the model to them to provide more precise, factual answers, without the need to retrain the model.

Why Use a RAG Framework?

The RAG frameworks turn intelligent information by enabling AI to access real-time, external information, either in documents, databases, or websites. The AI is also now able to fetch new and more relevant information and respond more accurately rather than simply using what it was trained on. This is necessary when performing tasks like customer support, internal search tools, research assistants, and domain-specific chatbots.

Overview of Popular Open-Source RAG Frameworks

A number of open-source frameworks have been popular for creating RAG-based systems. They are not actual AI models but provide an intermediary between the already existing models and the retrievers and external information.

1. LangChain

LangChain is an open-ended framework that allows developers to implement LLM-driven workflows. It offers a modular architecture that facilitates chaining the modules, including retrievers, memory, prompt patterns, and agents.

Key Features:

2. LlamaIndex

LlamaIndex (formerly GPT Index) is building to empower LLMs to read and query both structured and unstructured data. It allows programmers to create indexes of PDFs, SQL databases, APIs, and even Notion pages.

Use Cases:

3. Haystack

Haystack is a full-stack RAG framework, built by Deepset, and contains components such as retrievers, readers, rankers, and generators.

Key Features:

4. RAGFlow

RAGFlow is built for precise and accurate question-answering and deep understanding of documents. It allows for grounded answers with citations and efficient workflows.

Key Features:

5. txtAI

txtAI is a minimalist framework that does semantic search and RAG pipeline work with offline capabilities, making it a good choice for low-scale applications.

Key Features:

6. Cognita

Cognita is a modular RAG framework that provides a no-code interface to create and deploy AI chatbots capable of document search and retrieval.

Key Features:

7. LLMWare

Specializing in Private and Compliant RAG systems with specific small models. LLMWare enables speedy development without expertise in ML.

Key Features:

8. STORM

STORM is a RAG framework specifically for research intended to support structured knowledge synthesis. It allows AI to derive cohesive responses from outline-based planning.

Key Features:

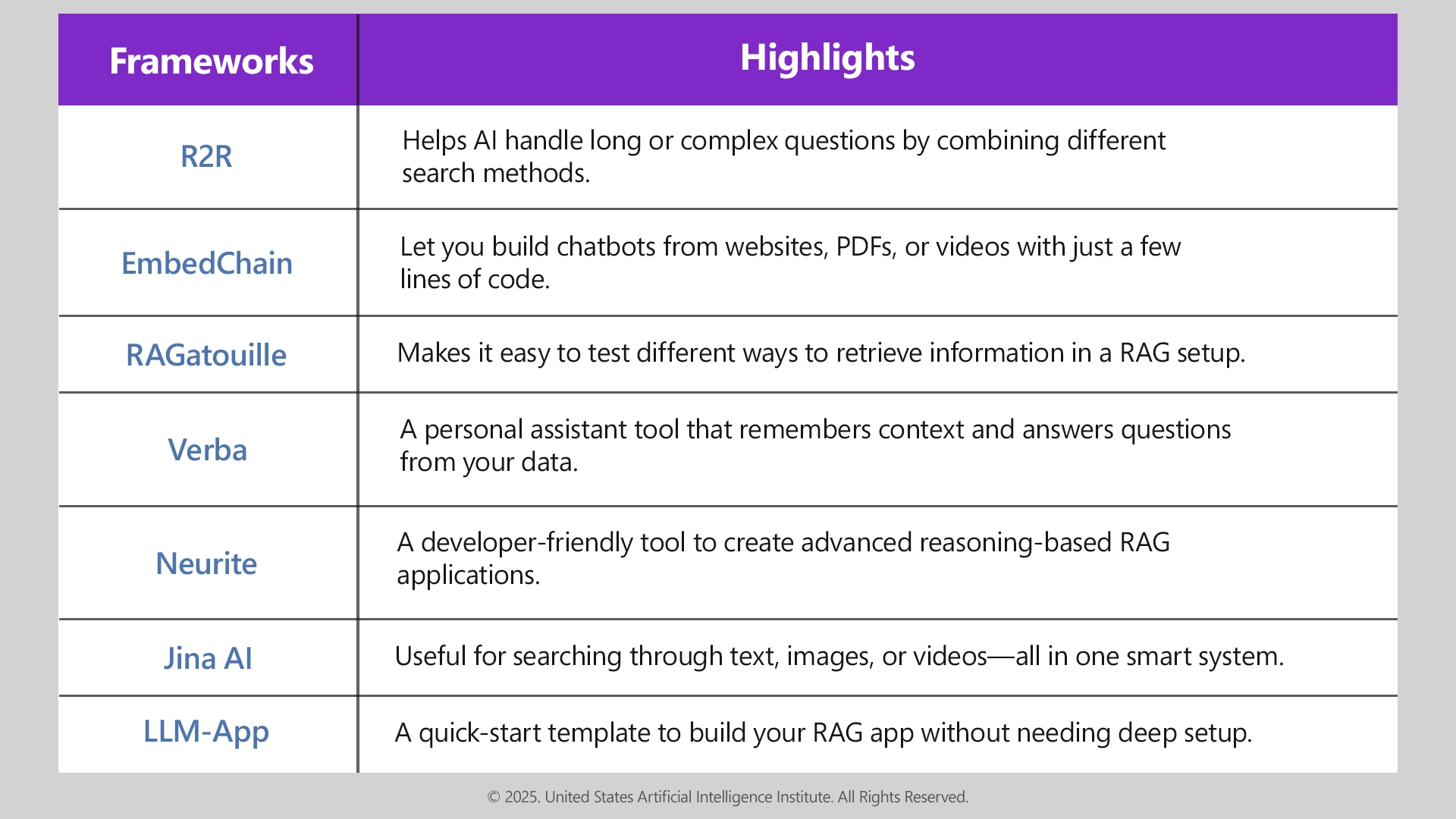

Other Notable RAG Frameworks

Download the latest USAII® guide on Agentic RAG to get your hands on the most contemporary evolution of AI Agents in RAG, its mechanism, and much more!

Key Challenges to Watch Out for When Building RAG Frameworks

The way to ensure that AI responses become smarter using Retrieval-Augmented Generation (RAG) is through the correct use of the technology. Weak setups or lax arrangements may lower production quality. These are a few mistakes that one should know about:

1. Indexing Irrelevant or Low-Quality Data

Putting all the documents, emails, or notes in your vector store overloads your system. This augments the probability of retrieving irrelevant or outdated information.

What to do: Select your sources—only index well-organized, trustworthy, and useful information.

2. Exceeding Token Limits

Language models have fixed context windows. If the total words in your prompt exceeds the limit, some parts are likely to get dropped, often without a message.

What to do: Limit the content you retrieve or use summarization to avoid exceeding token limits.

3. Prioritizing Quantity Over Quality in Retrieval

Retrieving a lot of documents could seem comprehensive, but the more documents you give back to the model that are not closely related to your query, the more they could dilute the model's understanding and decrease its response accuracy.

What to optimize for: Aim for precision. You would rather offer a few very relevant pieces of insight than thousands of documents that are loosely connected.

4. Lack of Logging and Observability

Debugging a model is intricate when the model gives a bad response, and you have no idea what went wrong. If you didn't track the steps—what documents were retrieved, how they were formatted, and what you sent to the model—then there is no way to recover from the bad response.

What to do: Keep low-level, detailed logs for each step of the RAG flow (user queries, retrieved documents, prompts, and responses). This level of detail will help you improve accuracy and reliability.

Final Thoughts

RAG frameworks represent a significant change in the way language models are approached. RAG, instead of considering them as predictors that remain fixed, enables us to develop dynamic and fact-based systems that remain context-conscious and up to date. And with the assistance of the open-source LangChain, LlamaIndex, Haystack, and more, it has never been easier to construct more intelligent, real-world AI apps.

Whether you are an AI engineer, developer, or business leader, learning and applying RAG is quickly becoming an expected competency. Whether you're upskilling with an AI course or pursuing machine learning certification, learning how to execute Retrieval-Augmented Generation is an important move towards creating the future of intelligent systems.

Follow us: