With industries actively adopting generative AI, mastering prompt engineering is becoming as important as learning to code and can be a game-changer for your career. Its value is not limited to technical domains: prompt engineering techniques help anyone converse naturally with powerful AI systems.

Read on to learn how to design, refine, and optimize prompts for better outputs, smarter workflows, and creative innovation.

Designing Prompts with Context Matters

Designing effective prompts is only one part of the job. What truly matters is understanding context and applying it to your prompts. Advanced prompt engineering involves more than writing questions or giving commands: it means shaping how the AI interprets and responds based on the user’s intent, prior interactions, the model’s training data and behavior, and other factors.

Context engineering is therefore an important part of prompt engineering: it uses a range of techniques to guide models toward more relevant and accurate outputs. Whatever the application, be it generating code, creating content, or analyzing data, prompt engineers should design prompts with context in mind to improve LLM performance and achieve reliable results.
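To make the idea of adding context concrete, here is a minimal Python sketch that assembles a prompt from a task plus optional context sections. The function name `build_prompt`, the section labels, and the sample sales figure are illustrative assumptions, not part of any particular library or dataset.

```python
def build_prompt(task, context=None, history=None, audience=None):
    """Assemble a prompt from a task plus optional context sections."""
    sections = []
    if audience:
        sections.append(f"Audience: {audience}")
    if context:
        sections.append(f"Background:\n{context}")
    if history:
        # Prior interactions are listed oldest first.
        turns = "\n".join(f"- {turn}" for turn in history)
        sections.append(f"Previous conversation:\n{turns}")
    # The task always comes last so it is the final thing the model reads.
    sections.append(f"Task: {task}")
    return "\n\n".join(sections)


# Same task, with and without context (the figures are invented for illustration).
bare = build_prompt("Summarize the quarterly sales report.")
rich = build_prompt(
    "Summarize the quarterly sales report.",
    context="Sales rose 12% in Q3, driven by the new smartwatch line.",
    history=["User asked for a one-page summary format."],
    audience="Non-technical executives",
)
print(rich)
```

Sending `bare` and `rich` to the same model is a quick way to see how much the added context changes the response.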

Types of Prompts: Communicating Effectively with AI

A prompt is the input or instruction a user gives to an AI model, such as a large language model (LLM), to get the desired output. A prompt defines the task, sets the tone, and frames the context for the model’s response.

The quality of your prompt determines how relevant, accurate, and useful the result will be.

Prompts fall into three main types:

Direct Instructions: They are clear and concise and ideal for tasks where the user wants a defined outcome.

Example: “Write a product description for a smartwatch.”

This prompt tells the AI exactly what kind of content to generate.

Open-ended Prompts: These are broader and give the AI room to interpret the request and respond creatively. They are great for storytelling or brainstorming.

Example: “What do you think the future of transportation looks like?”

Here, the AI might explore topics like electric vehicles, hyperloops, or space travel.

Task-specific Prompts: These are more precise prompts and are used for defined goals such as translation, summarization, or coding.

Example: “Summarize this article in two sentences.”

This prompt is designed for a particular outcome and may include extra context or examples.

By learning these prompt types, you can properly harness the power of AI to generate more accurate and meaningful responses.

Key Prompt Engineering Techniques

Prompt engineering techniques are strategic methods that prompt engineers and other users apply to craft effective prompts for AI models such as GPT-4 and Google Gemini. They guide generative AI systems toward accurate, relevant, and context-appropriate outputs that match the desired goals.

Prompt engineering leverages the capabilities of large language models (LLMs) to perform various kinds of tasks, from summarization to translation to creative writing.

Experimenting with different prompt structures helps users to shape model behavior and optimize their output. As generative AI is becoming a must-have technology across many fields, mastering prompt engineering can be very beneficial in unlocking its full potential and achieving better results.

To ensure AI models are most effective, prompt engineering employs different techniques customized according to specific tasks and objectives.

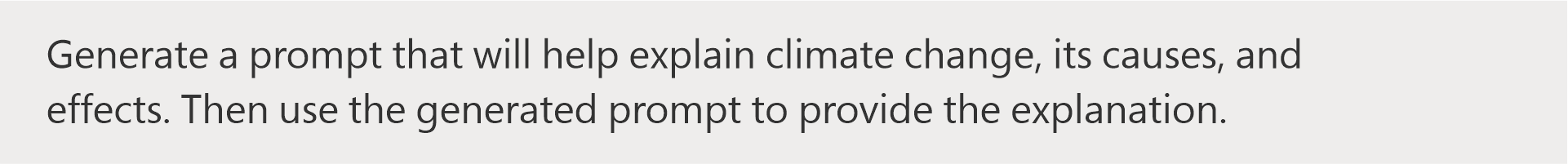

Let’s look at each of these techniques in turn.

Ask the model to perform a task without examples.

Include a few examples to guide tone and structure.
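Including a few examples in the prompt is commonly called few-shot prompting. The sketch below shows one way to lay out such a prompt in Python. The helper name `few_shot_prompt`, the `Review:`/`Sentiment:` labels, and the sample reviews are assumptions made for illustration.

```python
def few_shot_prompt(instruction, examples, query):
    """Build a prompt that shows worked examples before the real input."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The final block is left open so the model completes the label.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


instruction = "Classify the sentiment of each review as positive or negative."
examples = [
    ("The battery lasts all week.", "positive"),
    ("The strap broke after two days.", "negative"),
]
query = "Setup was quick and the screen is bright."

few_shot = few_shot_prompt(instruction, examples, query)
# Passing an empty example list gives the zero-shot version of the same prompt.
zero_shot = few_shot_prompt(instruction, [], query)
print(few_shot)
```

Because the examples fix both the label vocabulary and the output layout, the model has a clear pattern to continue.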

Guide the model to reason step-by-step.
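Step-by-step reasoning is usually requested with a short instruction appended to the question, an approach often called chain-of-thought prompting. The Python sketch below pairs such an instruction with a small parser for the final answer. The `Answer:` marker convention and the hand-written `sample_response` are assumptions; a real model reply would take the place of that string.

```python
import re

COT_SUFFIX = (
    "Think through the problem step by step, "
    "then give the result on a final line starting with 'Answer:'."
)


def chain_of_thought_prompt(question):
    """Append a step-by-step reasoning instruction to the question."""
    return f"{question}\n\n{COT_SUFFIX}"


def extract_answer(response):
    """Return the text after the last 'Answer:' line, or None if absent."""
    matches = re.findall(r"^Answer:\s*(.+)$", response, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


prompt = chain_of_thought_prompt(
    "A shop sold 3 boxes of 12 watches and then 4 more. How many in total?"
)

# Hand-written stand-in for a model reply, used only to exercise the parser.
sample_response = (
    "The shop sold 3 boxes of 12 watches, so 3 * 12 = 36.\n"
    "It then sold 4 more, so 36 + 4 = 40.\n"
    "Answer: 40"
)
print(extract_answer(sample_response))
```

Asking for a fixed marker on the last line keeps the reasoning visible while making the final answer easy to pull out in code.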

Ask the model to write or improve its own prompt.

Generate multiple answers and pick the best one.
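One common way to pick the best of several answers is a majority vote, often described as self-consistency. The sketch below assumes that approach. `fake_sample` is a hypothetical stub that cycles through canned replies in place of a real model sampled at a non-zero temperature.

```python
from collections import Counter
from itertools import cycle


def self_consistent_answer(sample_fn, prompt, n_samples=5):
    """Sample several answers and return the most common one with its vote share."""
    answers = [sample_fn(prompt) for _ in range(n_samples)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / n_samples


# Hypothetical stub: a real sampler would call a model and parse its answer.
_canned = cycle(["40", "40", "36", "40", "42"])


def fake_sample(prompt):
    return next(_canned)


answer, share = self_consistent_answer(fake_sample, "How many watches were sold?")
print(answer, share)
```

The vote share doubles as a rough confidence signal: a low share suggests the question is worth rephrasing or checking by hand.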

Have the model gather background before answering.

Use a series of prompts where one output feeds the next. The first prompt starts the task, and each following prompt is built on the response to the previous one.
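A chain of prompts can be sketched as a loop in which each template receives the previous output. In the Python example below, `run_chain`, the `{previous}` placeholder, and `echo_model` are illustrative assumptions. `echo_model` only tags its input so the data flow is visible without calling a real model.

```python
def run_chain(model, templates, initial_input):
    """Run prompts in order, substituting each output into the next template."""
    output = initial_input
    transcript = []
    for template in templates:
        prompt = template.format(previous=output)
        output = model(prompt)
        transcript.append((prompt, output))
    return output, transcript


def echo_model(prompt):
    # Hypothetical stand-in for a model call: returns a tagged copy of the prompt.
    return f"<reply to: {prompt}>"


templates = [
    "List the key features of this product: {previous}",
    "Write a tagline based on these features: {previous}",
    "Translate this tagline into French: {previous}",
]

final, transcript = run_chain(echo_model, templates, "a waterproof smartwatch")
for prompt, output in transcript:
    print(prompt, "->", output)
```

Keeping the transcript makes it easier to see which step in the chain produced a weak result.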

Explore multiple ideas before choosing one.

Pull in external data to answer.
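Pulling in external data is widely known as retrieval-augmented generation (RAG). The sketch below is a toy version in Python: it ranks documents by shared words and places the best matches in the prompt. Production systems typically use embedding-based search instead. The function names and the sample documents are assumptions for illustration.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=2):
    """Rank documents by how many query words they share (toy scoring)."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def grounded_prompt(query, documents, top_k=2):
    """Build a prompt that restricts the model to the retrieved passages."""
    passages = retrieve(query, documents, top_k)
    sources = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )


docs = [
    "The smartwatch battery lasts up to seven days on a single charge.",
    "Our return policy allows refunds within 30 days of purchase.",
    "The smartwatch is water resistant to a depth of 50 meters.",
]
question = "How long does the smartwatch battery last?"
prompt = grounded_prompt(question, docs, top_k=1)
print(prompt)
```

Telling the model to rely only on the supplied sources, and to say so when they fall short, is a simple guard against fabricated answers.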

Use tools (e.g., calculators, APIs) for reasoning.
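Tool use can be sketched as a model emitting a marker that the surrounding program replaces with a real result. In the Python example below, the `CALL name(args)` marker format is a hypothetical convention invented for this sketch, not a standard. The calculator parses arithmetic with the standard `ast` module rather than `eval()`.

```python
import ast
import operator
import re

_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculate(expression):
    """Safely evaluate +, -, *, / arithmetic without using eval()."""
    def walk(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError("unsupported expression")
    return walk(ast.parse(expression, mode="eval").body)


TOOLS = {"calculator": calculate}
# Matches markers such as CALL calculator(3 * 12 + 4); nested parentheses are not handled.
TOOL_CALL = re.compile(r"CALL (\w+)\((.*?)\)")


def resolve_tool_calls(model_output):
    """Replace each 'CALL name(args)' marker with the tool's result."""
    def run(match):
        name, argument = match.groups()
        return str(TOOLS[name](argument))
    return TOOL_CALL.sub(run, model_output)


# Hand-written stand-in for a model draft that asks for a calculation.
draft = "The order total is CALL calculator(3 * 12 + 4) watches."
resolved = resolve_tool_calls(draft)
print(resolved)
```

Delegating arithmetic to code means the number in the final text comes from a calculation rather than from the model's guess.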

Let the model generate optimized prompts and use them.

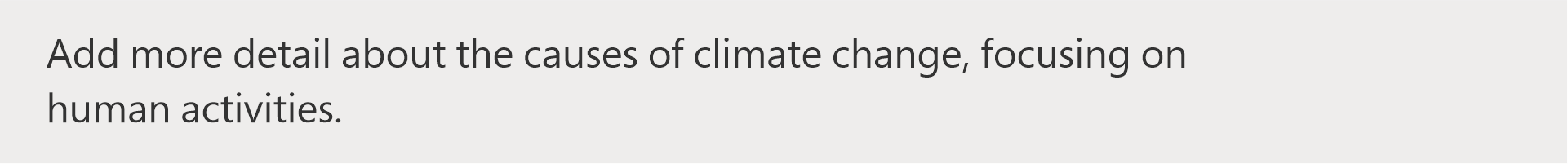

Refine prompts iteratively based on output. Start with an initial prompt, then send follow-up prompts that adapt to the intermediate outputs, gradually improving the response with each round.

Guide the response tone or lens.

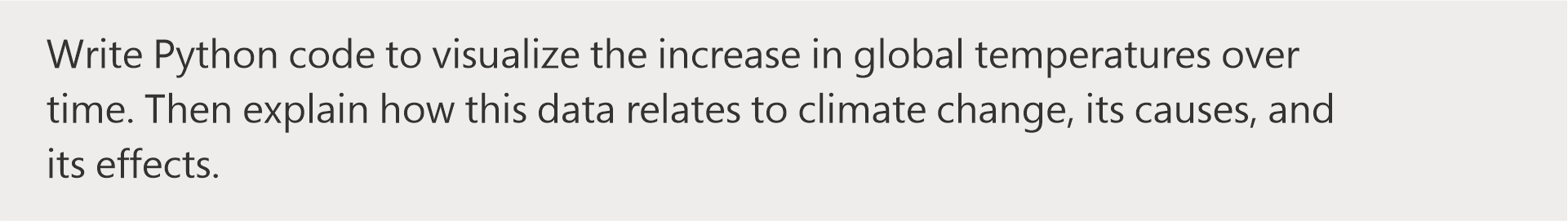

Combine programming with natural language.

Model reasons, retrieves, and refines.

Ask the model to critique and revise its own output.
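A critique-and-revise loop can be sketched as two cooperating calls: one drafts, one reviews. In the Python example below, `refine`, `fake_generate`, and `fake_critique` are hypothetical names. Both stubs stand in for model calls, and the eight-word limit is an arbitrary rule chosen so the loop has something to fix.

```python
def refine(generate, critique, task, max_rounds=3):
    """Draft, critique, and revise until the critic has no complaints."""
    draft = generate(task, feedback=None, previous=None)
    for _ in range(max_rounds):
        feedback = critique(task, draft)
        if not feedback:
            break  # An empty critique means the draft is accepted.
        draft = generate(task, feedback=feedback, previous=draft)
    return draft


def fake_generate(task, feedback, previous):
    # Stub: a real version would send the task, draft, and feedback to a model.
    if previous is None:
        return "This smartwatch tracks your heart rate, sleep, and steps all day long."
    # Pretend the model followed the feedback by shortening the draft.
    return " ".join(previous.split()[:6]).rstrip(",") + "."


def fake_critique(task, draft):
    # Stub: a real version would ask a model to review the draft.
    if len(draft.split()) > 8:
        return "Shorten it to eight words or fewer."
    return ""


result = refine(fake_generate, fake_critique, "Write a smartwatch tagline.")
print(result)
```

Capping the number of rounds with `max_rounds` keeps the loop from running indefinitely when the critic is never satisfied.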

Use images/audio with text reasoning.

Each of these prompt engineering techniques guides AI responses in its own way. You can apply them across generative AI models such as ChatGPT, Claude, Gemini, and IBM Granite to see how the outputs differ, then experiment to discover what works best for you.

Challenges to Prompt Engineering Techniques

Prompt engineering can be very powerful, but it comes with a few challenges that must be addressed.

It is difficult to create prompts that consistently yield accurate and reliable results, especially for complex tasks that require advanced reasoning, common sense, and subtle interpretation.

AI hallucination, in which a generative model produces incorrect or fabricated information, is another major problem.

Structured templates and fine-tuned models can reduce these issues, but prompt engineers still need to test and adjust their prompts by trial and error so that they perform well across different contexts. Balancing the broad capabilities of AI against the specific requirements of the user remains challenging.

Applications of Prompt Engineering Techniques and Future Prospects

Prompt engineering can be used effectively in almost every industry. In chatbots, it improves response quality and real-time interaction, while developers use it to generate code and explain programming concepts.

Similarly, in the education sector, prompt engineering is applied to simplify explanations and solve problems. From content creation to customer support, the range of applications is wide.

Looking ahead, prompt engineering is likely to become a major field within AI, evolving alongside smarter NLP capabilities that let models handle complex tasks with less input. Credible prompt engineering certifications can help you enter this specialized field and improve your employability.

With new tools coming up, we can expect them to streamline prompt design and make AI interactions more intuitive, personalized, and effective across all industries.
