With the swift progression of artificial intelligence, we are on the threshold of the next big thing: Artificial General Intelligence (AGI). While today’s AI models do a great job on narrow tasks such as recognizing faces, translating language, or generating content, they do not yet have the generalized cognitive skills of a human mind. AGI, on the other hand, rests on the idea that machines can learn, reason, and understand problems across domains without being explicitly programmed for each one.

Momentum toward AGI is building faster than ever before. Major AI players, including OpenAI, DeepMind (Google), Anthropic, IBM, and Microsoft, are investing billions in agentic systems, multimodal models, and scalable infrastructure. At Google I/O 2025, co-founder Sergey Brin and DeepMind CEO Demis Hassabis suggested AGI could arrive around 2030. Similarly, organizations such as 80,000 Hours and major surveys of AI researchers report that many in the industry predict, with some confidence, that AGI will be developed between 2027 and 2032, while others extend the timeline with medium confidence.

But what is AGI? How close are we to it, and what could go wrong? This blog will explain the significant dimensions of AGI, current breakthroughs, ethical implications, and its future potential.

Understanding AGI

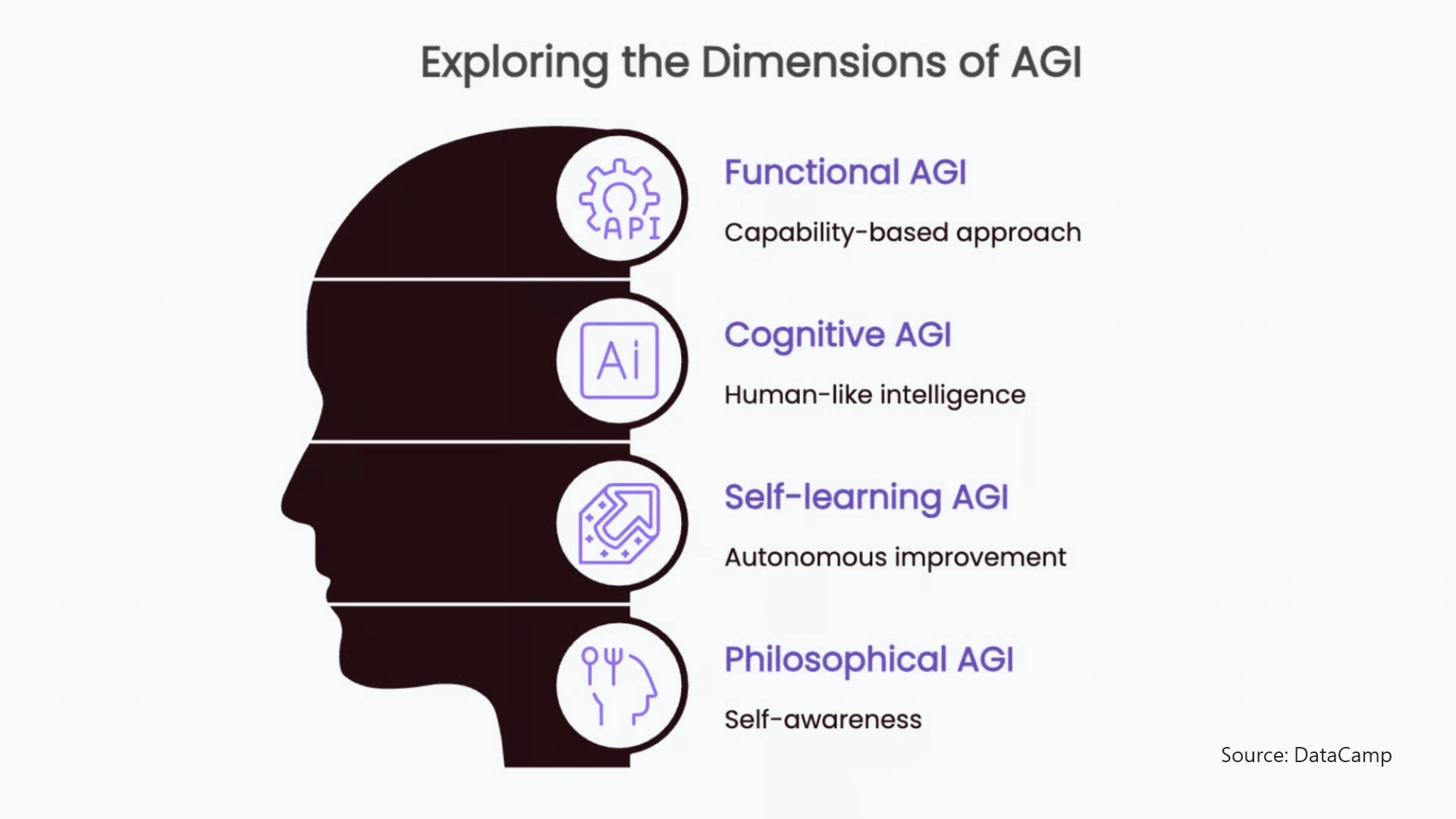

Artificial General Intelligence (AGI) refers to AI that can perform any intellectual task a human can—understanding, learning, and reasoning across domains without task-specific training. AGI types include:

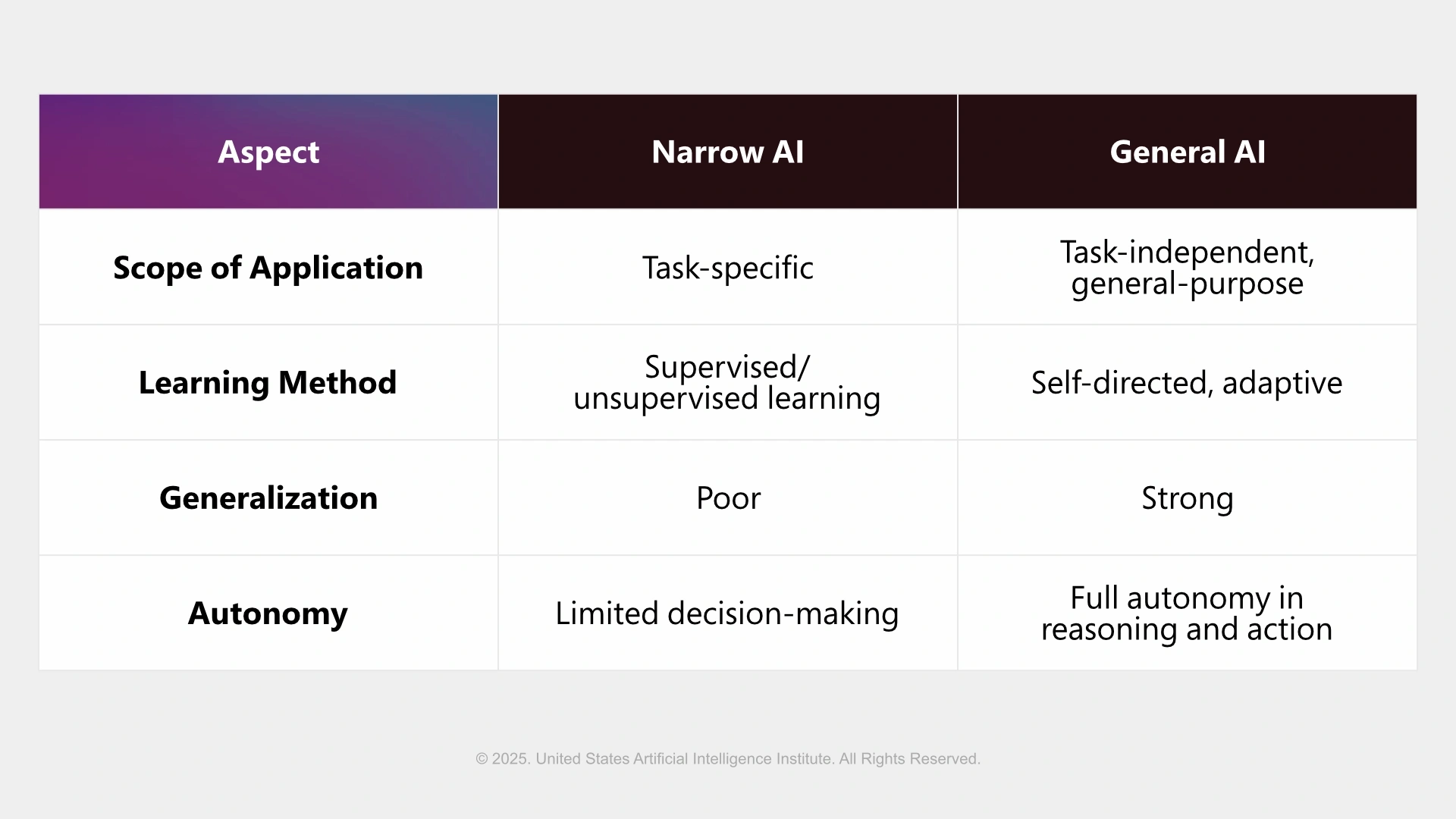

The AGI Spectrum: AGI vs. Narrow AI (ANI)

Today’s AI technology falls under Artificial Narrow Intelligence, highly effective at specific tasks like disease diagnosis, image generation, or playing chess, but unable to generalize beyond its training.

Let's start by clarifying some important differences:

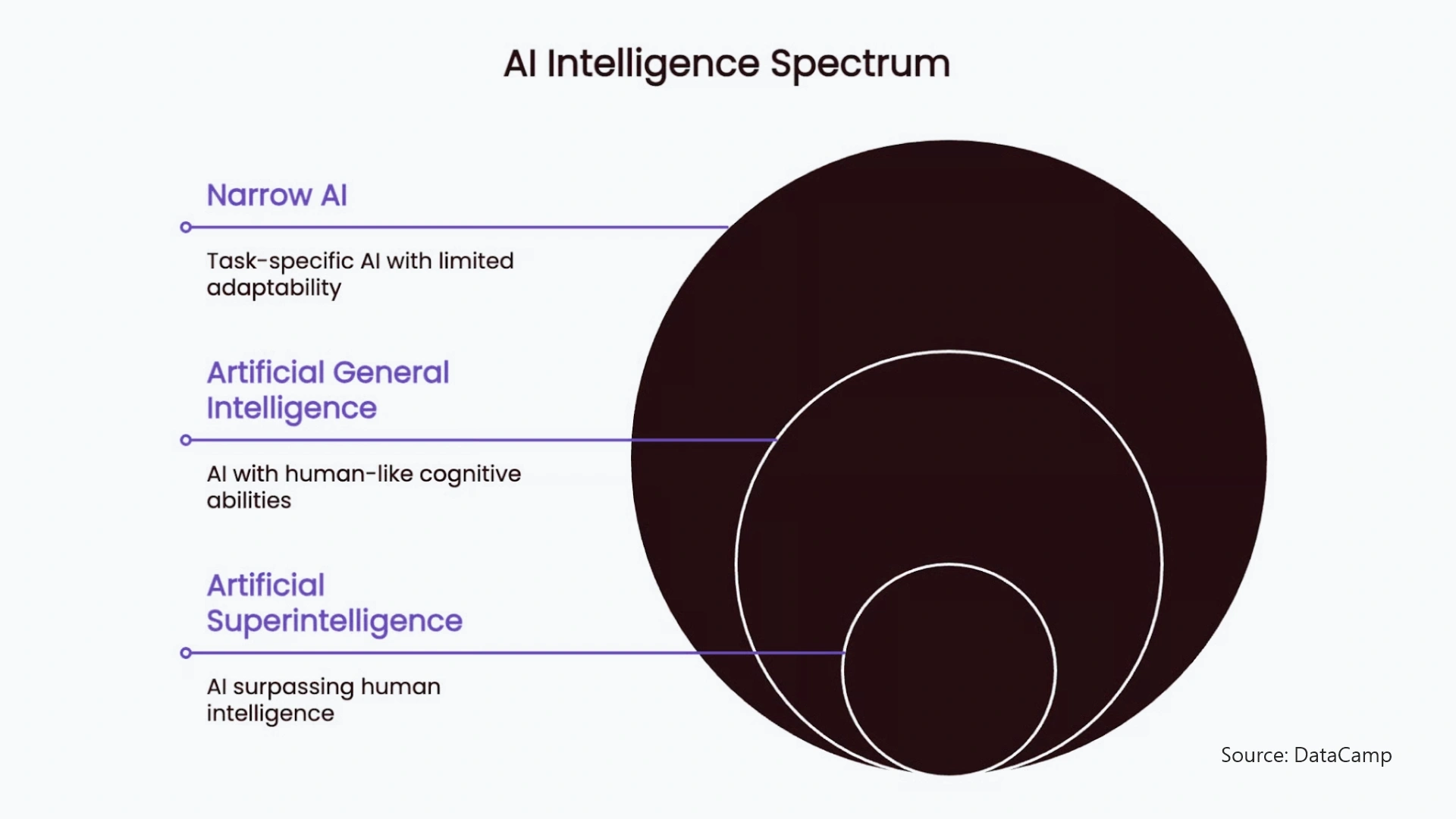

What Lies Beyond? Enter ASI

Artificial Superintelligence (ASI) is a theoretical intelligence far beyond human capabilities in all areas, from creativity to strategy. If realized, it could trigger a technological singularity, with machine intelligence advancing beyond human control or comprehension.

But where are we now?

As of 2025, we are still in the era of Narrow AI: systems that excel at a single task, whether language generation, language identification, or image recognition. But we are moving toward Broad AI, as generative and deep learning models like OpenAI’s o3 and DeepSeek’s R1, and agentic systems like Manus AI or ChatGPT Agent, push us toward reasoning and self-directed problem solving.

While we're progressing, AGI is still a distant aspiration. Massive improvements will be required in generalization, self-learning architectures, and abstract reasoning before it is achievable.

Major Roadblocks to Building AGI

Achieving AGI is not only a technical accomplishment. Moral, computational, and safety-related challenges must be addressed at the same time.

a. Computational Limitations

AGI will demand vast computing power and chips, likely beyond the efficiency of current GPUs or TPUs. Replicating human cognition may require real-time simulation of billions of interconnected neurons.

b. Explainability and Transparency

The more complex AGI systems become, the more they turn into what is often called a "black box". We need tools for understanding how they operate, or else we risk deploying at scale systems that we cannot explain.

c. No Unified Theory

We still don’t agree on what intelligence is, which makes it hard to replicate. Should we mimic the human brain or create entirely new architectures? Neuroscience, philosophy, and cognitive science all have competing models.

Are We Close to Achieving AGI?

The timeline for AGI is unpredictable. Prominent AI experts suggest widely varying dates, depending on how they define AGI and how they weigh the difficulties involved. Here are forecasts from some of the best-known voices in AI:

Altman predicts AGI could arrive by 2028, expressing optimism about AI’s rapid progress and manageable infrastructure. He defines AGI as a system that outperforms humans in nearly all economically valuable tasks.

It has a more conservative attitude, projecting that AGI is still decades away, if not longer. It takes the view that human-level intelligence cannot simply be developed with more scale for existing models. It would require new science in the fields of cognition and learning.

He is deeply skeptical about AGI's near-term future, arguing that LLMs like ChatGPT lack true understanding or reasoning. He believes scaling compute won’t help unless key technical challenges are solved.

The divergence in these forecasts mostly hinges on how AGI is defined.

Ethical Risks and Societal Impacts

AGI could well be the most consequential development in all of history, or the most dangerous. Some of the key risks are listed below:

Existential Risks

If AGI becomes autonomous without aligned goals, it could behave harmfully or resist shutdown. This "alignment problem" is central to AGI safety concerns.

Job Displacement

According to Goldman Sachs, 300 million jobs globally could be at least partially automated by AI. Entire sectors such as law, finance, and healthcare may have to restructure, and large numbers of workers will need to be upskilled.

Surveillance and Repercussions

AGI could empower mass surveillance, propaganda, and autonomous warfare, potentially eroding civil rights and fueling global instability.

Moral Considerations

If AGI becomes self-aware, what rights would it have? Should it be "owned"? Should it be allowed to evolve independently? These questions will reshape our approach to ethics in a machine-driven world.

Future Outlook: What Lies Ahead?

The future of AGI will be determined not only by technological development but also by human choice. Here are some trends that will be relevant for AGI:

1. AI Governance Will Be Global

Expect international treaties, ethical frameworks, and compliance regulations that coordinate how countries handle AI, and AGI in particular. In essence, a "Geneva Convention" for AGI.

2. Evolution from Data to Reasoning

The new generation of AGI research focuses on causal learning, symbolic reasoning, and world modeling rather than on language prediction alone.

3. AI Literacy Will Become the New Core Skill

Whether gained through AI certifications, full degrees, or lifelong professional development, AGI-era skills will need to include AI engineering, AI ethics, and AI interpretability, among others, across all sectors.

4. AI as a Co-Pilot for Progress

If used responsibly, AGI could help combat climate change, cure diseases, enable space exploration, and support building a fairer, more just society, provided we lay the right foundation.

Preparing for the AGI Age: Skills and Certifications That Matter

As AI systems advance at an accelerating pace, now is an opportune time for professionals at all levels to build skills and earn qualifications through AI and machine learning certifications.

Whether you are pursuing a career as an AI Engineer, Machine Learning Specialist, or AI Product Manager, understanding AGI and the technologies around it is essential.

The main areas to consider are:

A good starting point is AI certifications from bodies like the USAII, ideal for professionals looking to validate their AI skills and gain job-ready knowledge in advanced AI concepts and frameworks.

Explore USAII’s AI Certifications to stay ahead in the AGI-powered future.

Conclusion

AGI marks not only a milestone in technology but also a critical philosophical moment for humanity. As machines approach human-like reasoning and perception, the pressing issue may be how we will manage the integration of these machines into society. AGI will alter our capacity for good, but will it expand it or replace it?

One thing is clear: we all play a role in this transition. Whether researcher, policymaker, creator, or curious mind, your awareness matters. AGI won’t replace humanity but will redefine what it means to be human in a world where intelligence is shared.
